Abstract

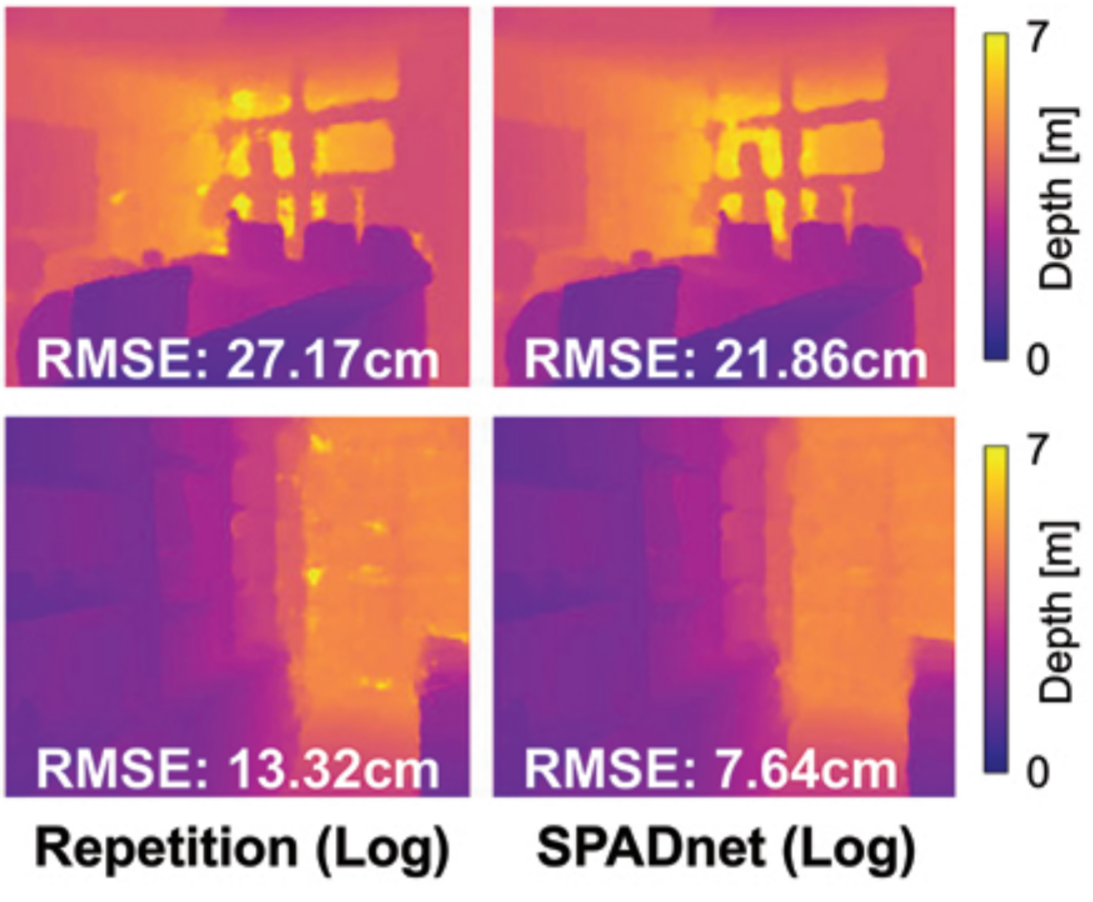

Single-photon light detection and ranging (LiDAR) techniques use emerging single-photon avalanche diodes (SPADs) to push 3D imaging capabilities to unprecedented ranges. However, it remains challenging to robustly estimate scene depth from the noisy and otherwise corrupted measurements recorded by a SPAD. Here, we propose a deep sensor fusion strategy that combines corrupted SPAD data with a conventional 2D image to estimate the depth of a scene. Our primary contribution is a neural network architecture, SPADnet, that uses a monocular depth estimation algorithm together with a SPAD denoising and sensor fusion strategy. This architecture, together with several network training techniques, achieves state-of-the-art results for RGB-SPAD fusion on both simulated and captured data. Moreover, SPADnet is more computationally efficient than previous RGB-SPAD fusion networks.

Citation

@article{sun2020spadnet,
  title={{SPADnet}: deep {RGB-SPAD} sensor fusion assisted by monocular depth estimation},
  author={Sun, Zhanghao and Lindell, David B and Solgaard, Olav and Wetzstein, Gordon},
  journal={Optics Express},
  volume={28},
  number={10},
  pages={14948--14962},
  year={2020},
  publisher={Optical Society of America}
}